Good news: your notebook probably comes with a GPU (e.g. MacBook Pro, Lenovo ThinkPad, etc.). Running some experiments with Scala on your GPU should be easy, but the libraries are evolving fast and documentation doesn’t always keep up. Because the tutorials I found didn’t work for me right off the bat, I decided to post here the sequence of steps that worked for me (on MacOS).

- Install Scala 2.9:

```
brew install scala29
```

- Make sure you’re running Scala 2.9:

```
scala -version
```

- Create a file called build.sbt (keep the blank lines between settings; older sbt versions require them):

```scala
scalaVersion := "2.9.2"

resolvers += "Sonatype OSS Snapshots Repository" at "http://oss.sonatype.org/content/groups/public/"

resolvers += "NativeLibs4Java Repository" at "http://nativelibs4java.sourceforge.net/maven/"

libraryDependencies += "com.nativelibs4java" % "javacl" % "1.0.0-RC2" // force latest JavaCL version

libraryDependencies += "com.nativelibs4java" % "scalacl" % "0.2"

autoCompilerPlugins := true

addCompilerPlugin("com.nativelibs4java" % "scalacl-compiler-plugin" % "0.2")
```

- Create a test class:

```scala
import scalacl._
import scala.math._

object Test {
  def main(args: Array[String]): Unit = {
    implicit val context = Context.best // prefer CPUs? use Context.best(CPU)
    val rng = (100 until 100000).cl // transform the Range into a CLIntRange
    // ops done asynchronously on the GPU (except synchronous sum):
    val sum = rng.map(_ * 2).zipWithIndex.map(p => p._1 / (p._2 + 1)).sum
    println("sum = " + sum)
  }
}
```

- Run the test class with sbt:

```
SCALACL_VERBOSE=1 sbt "run-main Test"
```
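To sanity-check the GPU result, it can help to run the same pipeline on the CPU with the standard Scala collections. The sketch below assumes that ScalaCL’s `map`, `zipWithIndex` and `sum` behave like the standard collection methods of the same names; `TestCpu` and `compute` are hypothetical names, and nothing here uses ScalaCL.

```scala
// Plain-Scala (CPU-only) version of the ScalaCL test above; no ScalaCL dependency.
object TestCpu {
  // Same pipeline as Test, on an ordinary Range: double each element,
  // pair it with its index, divide by (index + 1) using integer division, and sum.
  def compute(start: Int, end: Int): Int =
    (start until end).map(_ * 2).zipWithIndex.map(p => p._1 / (p._2 + 1)).sum

  def main(args: Array[String]): Unit = {
    println("sum = " + compute(100, 100000))
  }
}
```

Comparing the sum printed here with the one printed by the ScalaCL version is a quick way to confirm the OpenCL path computes what you expect.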

Sources:

Technology executive John De Goes points out that the term “big data” has been abused to oversell just about any data product, and suggests new, more informative terms that the industry should focus on:

As the industry matures, there won’t be a single term that replaces the big data moniker. Instead, different tools and technologies will carve out different niches, each more narrowly focused and highly specialized than the universal sledgehammer that was big data.

The list includes:

- Predictive Analytics: employing advanced techniques in statistics, machine learning, pattern recognition, data mining, modeling, natural language processing, and other fields to identify and exploit patterns.

- Smart Data: relying heavily on predictive analytics to monetize large volumes of data.

- Data Science: extracting meaning from large amounts of data to create new data products, e.g. by employing predictive analytics.

- NewSQL: highly-scalable distributed SQL systems, a child of the good old RDBMS with the new and rebellious NoSQL.

- Other trends: streaming analytics, NLP, multimedia mining, in-memory storage and computing grids, and graph databases.

Source: http://venturebeat.com/2013/02/22/big-data-is-dead-whats-next/#Q0cy9KflZG0THHXJ.99

If you want to learn about Big Data or influence its future in Europe and beyond, come join us in the discussion at the Big Data Public Private Forum project.

- Ask the right questions
  - In computing: garbage in, garbage out. In analytics: we will not get the right answers if we don’t ask the right questions.
  - Example: LinkedIn switching from measuring interviewer accuracy to optimizing the tension between releasing weak candidates early and not missing the good ones.
  - Is asking the right questions an art or a science?
- Practice good data hygiene
  - Given enough data and computational resources, you can find anything.
  - Example: if an NFC team wins the Super Bowl, the stock market goes up (with 80% accuracy).
  - Correlation is not causation.
  - Separate hypothesis generation from hypothesis testing.
- Don’t argue when you can experiment
  - Recent research indicates that reasoning may exist not for the purpose of finding the truth, but as a way of persuading people.
  - Example: Amazon offering product recommendations at checkout. The idea was initially shot down on instinct, later shown to be positive in experiments, and, once implemented, drove 5% of Amazon’s business for recommendations.
  - Why argue when you can test?
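The Super Bowl example is what happens when hypothesis generation and hypothesis testing use the same data. The minimal sketch below uses hypothetical names and seeded random data (nothing from the talk): it tries 1,000 random coin-flip “indicators” against a random “market went up” series, picks the one that best matches the first half of the years, and only then scores that single hypothesis on the second half.

```scala
import scala.util.Random

object SpuriousCorrelation {
  // Fraction of positions where two boolean series agree.
  def agreement(a: Seq[Boolean], b: Seq[Boolean]): Double =
    a.zip(b).count(p => p._1 == p._2).toDouble / a.size

  // Returns (in-sample agreement of the best candidate, its agreement on held-out data).
  def run(seed: Long, years: Int, candidates: Int): (Double, Double) = {
    val rnd = new Random(seed)
    def coinFlips(n: Int): Seq[Boolean] = Seq.fill(n)(rnd.nextBoolean())

    val market = coinFlips(years)                        // pure noise
    val signals = Seq.fill(candidates)(coinFlips(years)) // candidate "indicators": also pure noise

    val (trainIdx, testIdx) = (0 until years).splitAt(years / 2)
    def pick(s: Seq[Boolean], idx: Seq[Int]): Seq[Boolean] = idx.map(i => s(i))

    // Hypothesis generation: choose the signal that best matches the first half.
    val best = signals.maxBy(s => agreement(pick(s, trainIdx), pick(market, trainIdx)))
    // Hypothesis testing: score that one signal on years it was not chosen on.
    (agreement(pick(best, trainIdx), pick(market, trainIdx)),
     agreement(pick(best, testIdx), pick(market, testIdx)))
  }

  def main(args: Array[String]): Unit = {
    val (inSample, outOfSample) = run(seed = 42L, years = 40, candidates = 1000)
    println("best signal, in sample:     " + "%.2f".format(inSample))
    println("same signal, out of sample: " + "%.2f".format(outOfSample))
  }
}
```

With noise on both sides, the winner’s in-sample agreement typically looks impressive, while its held-out agreement falls back toward 50%.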

The video Science as a Strategy is available online and it is well worth watching.
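Experiments like the Amazon checkout test are commonly read as A/B tests. As one hedged illustration (not a description of what Amazon actually did), the sketch below computes a two-proportion z statistic for conversions in a control group A and a treatment group B, using the pooled rate under the “no difference” hypothesis. The object name and all visitor and conversion counts are made up.

```scala
object AbTest {
  // Two-proportion z statistic: (pB - pA) divided by the standard error
  // computed from the pooled conversion rate of both groups.
  def zScore(convA: Int, nA: Int, convB: Int, nB: Int): Double = {
    val pA = convA.toDouble / nA
    val pB = convB.toDouble / nB
    val pooled = (convA + convB).toDouble / (nA + nB)
    val se = math.sqrt(pooled * (1 - pooled) * (1.0 / nA + 1.0 / nB))
    (pB - pA) / se
  }

  def main(args: Array[String]): Unit = {
    // Hypothetical numbers: 10,000 visitors per group.
    val z = zScore(convA = 500, nA = 10000, convB = 570, nB = 10000)
    // |z| > 1.96 corresponds to p < 0.05 (two-sided) under the normal approximation.
    println("z = %.2f, significant at 5%%: %s".format(z, math.abs(z) > 1.96))
  }
}
```

With these made-up numbers z comes out at about 2.2, so the difference would count as significant at the 5% level: a result you can act on instead of arguing about.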

Issues like the ones mentioned by Daniel are being discussed in the Data Analysis working group of the EU Project BIG. You should join the conversation!

Sometimes you are asked to print a document, sign it and send it back by e-mail. If it is a 25-page document, you don’t want to scan everything again. You can work only on the pages that need your signature.

1) Break the input PDF (unsigned) into pages. Assuming 25 pages in the original doc (large-document.pdf), do:

for i in $(seq -f "%04.0f" 25) ; do pdftk large-document.pdf cat $i output pg_$i.pdf ; done

As a result, you will get one pdf file per page, where the output is named pg_0001.pdf for page 1, …, pg_0025.pdf for page 25.

2) Print only the pages that need a signature, sign them and scan them. For page 25, save the scan as scanned_25.png.

3) To convert the PNG to black and white, open it in GIMP 2.6 and use the menu “Tools / GEGL Operation / c2g” (color to gray). See: http://blog.wbou.de/2009/08/04/black-and-white-conversion-with-gegls-c2g-color2gray-in-gimp/

I also had to resize the images to 8.5 x 11 inches (US letter size).
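Resizing to 8.5 x 11 inches pins down the pixel dimensions only once you pick a resolution: pixels = inches × dpi. The small sketch below does that arithmetic; `LetterSize` is a hypothetical name, and the 150/200/300 dpi values are just common scanner settings chosen for illustration.

```scala
object LetterSize {
  // Pixel dimensions of a page of the given size (in inches) at `dpi` dots per inch.
  def pixels(widthIn: Double, heightIn: Double, dpi: Int): (Int, Int) =
    (math.round(widthIn * dpi).toInt, math.round(heightIn * dpi).toInt)

  def main(args: Array[String]): Unit = {
    for (dpi <- Seq(150, 200, 300)) {
      val (w, h) = pixels(8.5, 11.0, dpi)
      println(dpi + " dpi: " + w + " x " + h + " px")
    }
  }
}
```

At 300 dpi, for example, a US letter page is 2550 x 3300 pixels.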

4) To convert a signed page (scanned_25.png) to PDF, use ImageMagick. Give the output the same name as the unsigned page, so that the signed page replaces it:

convert -size 8.5x11 scanned_25.png pg_0025.pdf

5) To glue it all back together:

pdftk pg* cat output - > pg_all.pdf
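Step 5 relies on the zero padding from step 1: the shell expands `pg*` in sorted order, so pg_0025.pdf lands after pg_0024.pdf. Without padding, pg_10.pdf would sort before pg_2.pdf and the pages would come out scrambled. The sketch below (hypothetical `PageNames` object) reproduces both orderings with plain lexicographic string sorting, which matches what the shell does in the C locale; other locales may collate unpadded names differently, but the padded names sort correctly either way.

```scala
object PageNames {
  // Names produced by the seq -f "%04.0f" loop in step 1: pg_0001.pdf ... pg_0025.pdf
  def padded(pages: Int): Seq[String] = (1 to pages).map(i => "pg_%04d.pdf".format(i))
  // The same names without zero padding: pg_1.pdf ... pg_25.pdf
  def unpadded(pages: Int): Seq[String] = (1 to pages).map(i => "pg_" + i + ".pdf")

  def main(args: Array[String]): Unit = {
    // Sort the way a C-locale glob would, and show the first five names.
    println(unpadded(25).sorted.take(5).mkString(" ")) // pg_1.pdf pg_10.pdf pg_11.pdf pg_12.pdf pg_13.pdf
    println(padded(25).sorted.take(5).mkString(" "))   // pg_0001.pdf pg_0002.pdf pg_0003.pdf pg_0004.pdf pg_0005.pdf
  }
}
```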

On Windows, you can do all of this via Cygwin. Just make sure to install all the necessary packages, including pdftk, ImageMagick, etc.

In episode 6 of the RadioFreeHPC podcast, they discuss a couple of examples of Big Data gone bad. These are the articles mentioned:

- Analytics Gone Wrong: Dire Consequences for Kids

- JPMorgan Trading Loss May Reach $9 Billion

- Learning From Mistakes: Applying Big Data to Realtime Risk Analysis

Here are the conclusions I noted down (often with my own bias) from the podcast and from the articles:

- Be careful with conclusions: One cannot blindly follow any software. People making decisions based on software-generated advice should have at least a basic understanding of what data goes into the software and what assumptions it makes.

- Be careful with input: Parameters used in the software must be chosen with good reason.

- Be careful with output: Unexpected results must trigger further investigation. “Computer says no” [1] is not a valid answer.

- Care about additional information/feedback: Do not discard any evidence that may contradict the initial findings without careful consideration.

- Be careful with decision making: Only enable people to execute the kinds of analysis that they are prepared to execute correctly.

- Care about visibility: make sure everybody who should see the data has seen it.

- Care to ‘connect the dots’: you need an integrated view across the different systems/datasets that influence a decision.

- Care about usability: “effectiveness of any technology is down to the people that use it.” Systems/recommendations must be understandable and easy to use by the people who make decisions, not system architects or statisticians.

- Be careful with complex systems: as systems get stacked on top of legacy systems, even IT staff lose track of what exists and where. Care about simplifying the stack.

All of these topics and more are being discussed in the context of the EU FP7 BIG project. If you’re interested in these discussions, there are a few discussion lists that you can join to contribute to the conversation. For example, two groups relevant for the discussion above:

Although this is not a conventional blog post, I would like to share my notes from the (may I say, fantastic) Big Graph Data Panel at ISWC2012 in Boston, MA, USA. The panel was moderated by Frank van Harmelen and composed of Michael Stonebraker, Tim Berners-Lee, John Giannandrea and Bryan Thompson.

Disclaimers: Although I tried to be faithful to what I heard at the panel, please do not take the attributed sentences as word-for-word quotes. There is certainly some unmeasured amount of interpretation and paraphrasing that went into this. There is also a bias towards topics (or rhetoric) that piqued my interest, and unfortunately a lack of attribution (or misattribution) due to the speed at which I had to type all the notes as people were speaking. 🙂 I still hope the content is useful for your understanding of the topics.

What is Big Data? What do Semantic Web and Big Data offer to each other?

Stonebraker: Big Data encompasses three types of data problems.

– volume: you have too much data. For example, all of the Web.

– speed: data comes at you too fast. For example, query logs, sensor data, etc.

– variety: you have too many sources of data. For example, a pharma company with thousands of spreadsheets, each created by an individual researcher, with no common language (German, English, Portuguese), different writing styles, vocabulary, etc.

In other words, as put by Deborah McGuinness in her tweet: Big Volume or Big Velocity or Big Variety. These “Three ‘Vs’ of Big Data” were originally posited by Gartner’s Doug Laney in a 2001 research report.

Stonebraker: Big Data is only a problem if your data needs grow faster than memory gets cheaper.

Giannandrea: First thing is to understand what a Graph Database even is.

Berners-Lee: everything can be structured in a graph. Saying graph structured data is like saying “data data”.

Do we even need graph databases? Don’t relational database systems already solve everything?

Stonebraker: “major DB vendors are 30-year-old obsolete systems that are not good at anything”.

Stonebraker: how to do graph processing at scale is an unsolved problem, where “at scale” means that the graph does not fit in whatever aggregate memory you have.

Paraphrasing Deborah McGuinness’s quote of Stonebraker: Let the benchmark wars begin. If winners are 10x better, then you survive (if they are only 2x better, then the giant companies will take you over).

Would anybody with Big Graph Data problems use SPARQL? Or even SQL? Or must it be MapReduce?

Stonebraker: About SPARQL, don’t get hung up on your query language. In the Hadoop world, everybody is moving to Hive. Hence, all SQL vendors are starting to write Hive2SQL translators.

Stonebraker: About MapReduce, it is not the final answer. Google wrote MapReduce 7 years ago. It is good at embarrassingly parallel tasks. Joins are not embarrassingly parallel.
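Why joins are not embarrassingly parallel can be illustrated with a small sketch of a partitioned (repartition) hash join in plain Scala collections. A map step can process each row on its own, but rows from both inputs that share a join key must first be redistributed (shuffled) into the same partition; only then can each partition be joined independently. This is one standard technique, simulated sequentially; it is not a claim about how MapReduce or any particular system implements joins, and all names and data are hypothetical.

```scala
object PartitionedJoin {
  // "Shuffle": send each (key, value) row to partition |key mod n|.
  def partitionByKey[V](rows: Seq[(Int, V)], n: Int): Seq[Seq[(Int, V)]] =
    (0 until n).map(p => rows.filter(r => math.abs(r._1 % n) == p))

  // Local hash join inside one partition: index the left side by key, probe with the right side.
  def localJoin[A, B](left: Seq[(Int, A)], right: Seq[(Int, B)]): Seq[(Int, A, B)] = {
    val index = left.groupBy(_._1)
    for {
      (k, b) <- right
      (_, a) <- index.getOrElse(k, Seq.empty)
    } yield (k, a, b)
  }

  def join[A, B](left: Seq[(Int, A)], right: Seq[(Int, B)], n: Int): Seq[(Int, A, B)] = {
    // Both inputs must be moved so that matching keys meet; a per-row map never needs this.
    val ls = partitionByKey(left, n)
    val rs = partitionByKey(right, n)
    // After the shuffle, partitions are independent (on a cluster, one per machine).
    (0 until n).flatMap(p => localJoin(ls(p), rs(p)))
  }

  def main(args: Array[String]): Unit = {
    val users  = Seq(1 -> "alice", 2 -> "bob", 3 -> "carol")
    val orders = Seq(1 -> "book", 3 -> "lamp", 1 -> "pen", 4 -> "mug")
    join(users, orders, n = 2).foreach(println)
  }
}
```

The result does not depend on the number of partitions; what grows with scale is the cost of moving both inputs across the network before any joining can start.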

What about Open Data? Is there any incentive for it?

Van Harmelen: “standard anecdote: the incentive for opening up your data is that if you get successful, your servers burn down.”

Stonebraker: The biggest problem is trying to put together, after the fact, stuff that was not designed to be put together.

Stonebraker: deduplicating fuzzy data is one of the killer problems.

Stonebraker: “there is tremendous value in curating the data.”

Sheth: explicit, named relationships make deduplication easier.
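To make “deduplicating fuzzy data” concrete, here is a minimal sketch, assuming records are short name strings: normalize them, compare with Levenshtein edit distance relative to the longer string, and greedily cluster anything under a threshold. The 0.2 threshold, the sample names and the `FuzzyDedup` object are hypothetical. Sheth’s point applies directly: when records carry explicit identifiers or named relationships, you can often group on those and skip most of this guesswork.

```scala
object FuzzyDedup {
  // Dynamic-programming Levenshtein distance (insert, delete, substitute each cost 1).
  def levenshtein(a: String, b: String): Int = {
    val dist = Array.tabulate(a.length + 1, b.length + 1)((i, j) => if (i == 0) j else if (j == 0) i else 0)
    for (i <- 1 to a.length; j <- 1 to b.length) {
      val cost = if (a(i - 1) == b(j - 1)) 0 else 1
      dist(i)(j) = math.min(math.min(dist(i - 1)(j) + 1, dist(i)(j - 1) + 1), dist(i - 1)(j - 1) + cost)
    }
    dist(a.length)(b.length)
  }

  // Lowercase, drop punctuation, collapse whitespace.
  // ASCII-only normalization is a simplification: it also drops accented letters.
  def normalize(s: String): String =
    s.toLowerCase.replaceAll("[^a-z0-9 ]", "").replaceAll("\\s+", " ").trim

  // Duplicates if the edit distance, relative to the longer normalized string, is within `threshold`.
  def similar(a: String, b: String, threshold: Double): Boolean = {
    val (x, y) = (normalize(a), normalize(b))
    val longest = math.max(x.length, y.length)
    longest == 0 || levenshtein(x, y).toDouble / longest <= threshold
  }

  // Greedy clustering: each record joins the first cluster whose first member it resembles.
  // Every record is compared with every cluster (O(n^2) in the worst case), which is why
  // this gets hard at scale without tricks such as blocking.
  def dedup(records: Seq[String], threshold: Double): Seq[Seq[String]] =
    records.foldLeft(Vector.empty[Vector[String]]) { (clusters, r) =>
      clusters.indexWhere(c => similar(c.head, r, threshold)) match {
        case -1 => clusters :+ Vector(r)
        case i  => clusters.updated(i, clusters(i) :+ r)
      }
    }

  def main(args: Array[String]): Unit = {
    val names = Seq("Acme Pharma GmbH", "ACME Pharma, GmbH", "Acme Pharmaceuticals", "Beta Labs Inc.", "Beta Labs Inc")
    dedup(names, threshold = 0.2).foreach(c => println(c.mkString(" | ")))
  }
}
```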

What about the original Semantic Web idea, of querying a distributed graph on the Web?

Stonebraker: “query response time in the distributed way is as slow as the slowest provider. People centralize to speed it up.”

Attendee: “centralizing only makes sense if you know what you want to do. Putting data out on the Web enables people to find it out.”

Someone (I think Thompson?): “Semantic Web research needs to find out what is the right bit of “well curated data/process/schema/query” to add on top of big data.”

My question to the panel: What will Big Graph Data look like in 2022? Solved or beginning? Volume? In silos or global?

Giannandrea: in 2022 we will understand data better

Thompson: we will still not get the semantic interoperability across systems by 2022

Stonebraker: big data will be a bigger and bigger problem at least for the next decade

Berners-Lee: there is a battle of small vocabularies for interoperability coming in the next years

Participate! If you would like to continue discussing the future of Big Graph Data, you should join the mailing lists of the FP7 BIG Project. The Working Groups on Data Analysis and Data Storage are particularly relevant to this discussion. Check them out!

I had to answer this question more than once, so it’s probably worth posting in case somebody else out there is looking for the answer.

Are you getting this error message when trying to run a class that uses Log4J?

```
log4j:WARN No appenders could be found for logger (ldif.local.Ldif$).
log4j:WARN Please initialize the log4j system properly.
```

Are you specifying a pointer to your log4j.properties file like this?

java -Xmx2G -Xms256M -Dlog4j.configuration=file:../resources/log4j.properties [MainClass]

The part that most people forget is the “file:” prefix before the path to log4j.properties. Check that once more and try again. 🙂

If you are using IntelliJ IDEA, the place to set that is under “Run / Edit Configurations / (choose your class under Applications) / VM options”.
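Why does “file:” matter? As far as I can tell from log4j 1.2’s default initialization, the value of `-Dlog4j.configuration` is first parsed as a URL, and only if that fails is it looked up as a resource on the classpath. A bare relative path such as ../resources/log4j.properties is not a valid URL, so it ends up as a classpath lookup that usually finds nothing, hence the warning. The sketch below uses only `java.net.URL` (no log4j involved) to show which form parses as a URL; `isUrl` is a hypothetical helper.

```scala
import java.io.File
import java.net.{MalformedURLException, URL}

object Log4jConfigUrl {
  // True if `value` parses as a URL, e.g. "file:../resources/log4j.properties".
  def isUrl(value: String): Boolean =
    try { new URL(value); true } catch { case _: MalformedURLException => false }

  def main(args: Array[String]): Unit = {
    val withoutPrefix = "../resources/log4j.properties"
    val withPrefix = "file:" + withoutPrefix
    println(withoutPrefix + " -> URL? " + isUrl(withoutPrefix)) // false: no protocol
    println(withPrefix + " -> URL? " + isUrl(withPrefix))       // true
    // An absolute file: URL for the same path, built from the current directory
    // (the exact output depends on where you run this).
    println(new File(withoutPrefix).toURI.toURL)
  }
}
```

If relative paths keep causing trouble, passing an absolute file: URL like the last line prints avoids depending on the working directory.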

I wasted about 2 hours on Cisco VPN Client error 442, so I’d like to spare you the same frustration:

- Go to “Start > Run”

- Type “cmd” to open the command prompt

- Paste the line below to stop the “Internet Connection Sharing (ICS)” service (sparing you the usual hunt through multiple dialogs on Windows):

```
sc stop SharedAccess
```

- Now open Cisco VPN; it should work.

Sources:

http://www.lamnk.com/blog/vpn/how-to-fix-cisco-vpn-client-error-442-on-windows-vista/

http://www.jasonn.com/enable_windows_services_command_line

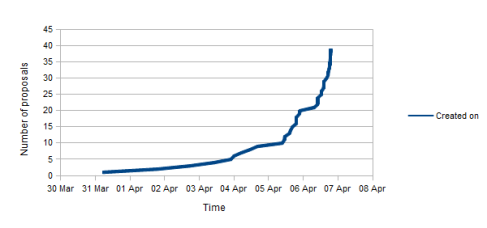

This year Google’s Summer of Code has “had a record 6,685 student proposals from 4,258 students submitted to this year’s 180 participating mentoring organizations.” DBpedia Spotlight is participating this year for the first time. We were thrilled to watch and interact with prospective students as 48 applications gradually showed up in the system, culminating in an almost chaotic number of applications on the last day.

Chart based on a spreadsheet kindly shared by Olly.

Excluding proposals that we considered spam (incomplete, copy+paste, unrelated, etc.), about 30 applications remained, including “ok”, “good”, “very good” and several “amazing” ones! Google will announce later today how many students we will get, based on their available budget. But I can already tell you that it will be a tough choice for us!

The most popular ideas were Topical Classification and Hadoop-based Indexing, followed by Internationalization, Spotting and Disambiguation. Surprisingly, we got far fewer “obvious combination” proposals than we expected — that is, proposals combining ideas that we thought would be perfect matches. Many students really put time into understanding the system and our ideas, and we were generally quite impressed with everybody’s interest and energy. Some students also went the extra mile and combined their ideas with ours. Overall, a really exciting summer is already rising above the horizon for us!